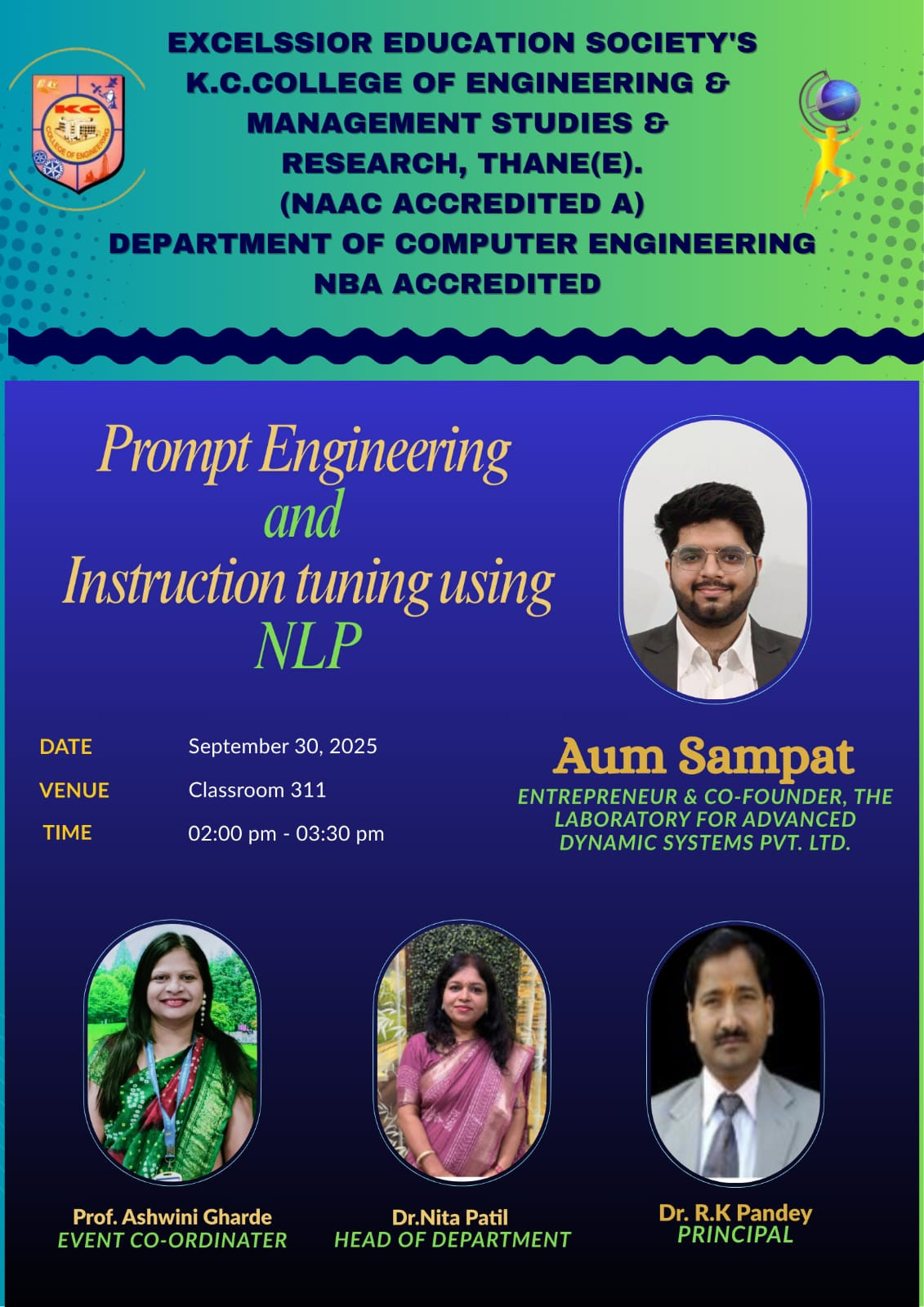

Prompt Engineering and Instruction Tuning Using NLP

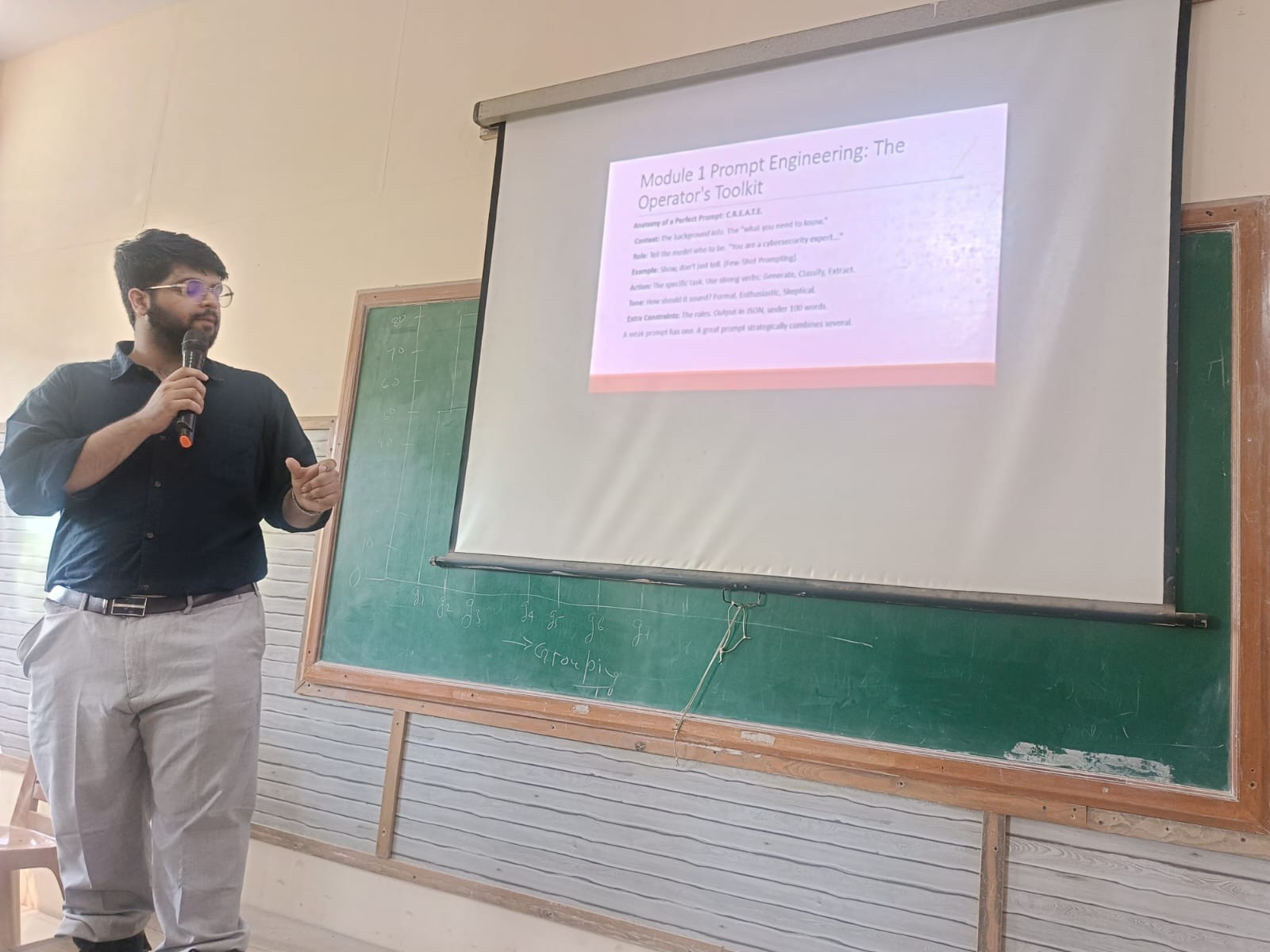

The guest lecture on Prompt Engineering and Instruction Tuning using NLP will provide participants with an understanding of how natural language prompts shape the performance of large language models (LLMs). The session will begin with an introduction to the fundamentals of NLP and the rise of generative AI, followed by a detailed explanation of prompt engineering concepts and techniques.

Participants will learn how effective prompt design can improve model accuracy, relevance, and creativity in tasks such as question answering, summarization, translation, and conversational agents. Different prompting strategies, such as zero-shot, few-shot, chain-of-thought, and role-based prompting, will be explored with practical examples.

The session will also highlight challenges in prompt engineering, including bias, ambiguity, and ethical concerns, and discuss future directions such as instruction tuning and alignment methods. By the end of the lecture, attendees will gain both theoretical insights and practical knowledge to design, refine, and experiment with prompts for diverse NLP applications.